Moltbot (Clawdbot) in the Wild: Exposure Risks and Practical Hardening

What we learned by looking at how people actually deploy autonomous agents, and why agent gateways should be treated like privileged infrastructure, not hobby projects.

Computer-Using Agents (CUA) are part of a rapidly growing class of software: agent gateways that allow LLMs to interact with local machines and real tools (messaging, email, calendars, browsers, shells), often running persistently and with meaningful autonomy.

That combination is powerful.

It also fundamentally changes the security equation.

Moltbot (formerly known as Clawdbot) is one recent example of this class of tooling, and it has gained significant traction in a short period of time. As the image below shows, it passed 60K GitHub stars in about two months, an unusually fast climb even compared with projects like LangChain (which took over a year) or OpenHands (almost two years).

We spent time examining the real-world deployment surface of Moltbot instances exposed on the public internet, not to single out the project, but to understand what happens when high-autonomy infrastructure is deployed the same way hobby projects often are: quickly, publicly, and without careful hardening.

What we found were familiar patterns, but with a much larger blast radius.

What Moltbot is (and how it works)

At a high level, Moltbot is an open-source personal AI assistant developed by Peter Steinberger, designed to operate across the communication channels people already use (e.g., WhatsApp, Telegram, Slack, Signal) and to run continuously.

From a security perspective, its architecture matters more than its feature set. The key components are:

Gateway

An always-on process that acts as the control and execution plane, handling message routing, tool invocation, and integration plumbing. It is designed to run persistently until stopped.

Control UI / Web surface

The Gateway serves a browser-based control interface on the same port as its WebSocket interface (with configurable paths and prefixes). This is where integrations, credentials, and operational state are managed.

Tools and integrations

Depending on configuration, the agent can invoke local or system tools and connect to external services using OAuth tokens and API keys.

    WhatsApp / Telegram / Slack / Discord / Google Chat / Signal / iMessage / BlueBubbles / Microsoft Teams / Matrix / Zalo / Zalo Personal / WebChat
                   │
                   ▼
    ┌───────────────────────────────┐
    │            Gateway            │
    │        (control plane)        │
    │     ws://127.0.0.1:18789      │
    └──────────────┬────────────────┘
                   │
                   ├─ Pi agent (RPC)
                   ├─ CLI (clawdbot …)
                   ├─ WebChat UI
                   ├─ macOS app
                   └─ iOS / Android nodes

Together, the Gateway and Control UI form a privileged control plane for an autonomous system. If this plane is exposed and misconfigured, an attacker doesn’t just gain visibility; they can potentially inherit capability.
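The diagram shows the Gateway listening on ws://127.0.0.1:18789, a loopback address that only the same machine can reach. One way it becomes exposed is when that listener, or something placed in front of it, binds to a wider address. As a rough illustration, the Python sketch below (a hypothetical helper, not part of Moltbot) uses the standard-library `ipaddress` module to classify a bind address. It looks only at the address itself, so it cannot see firewalls, port forwarding, tunnels, or reverse proxies.

```python
import ipaddress


def classify_bind_address(host: str) -> str:
    """Roughly classify how reachable a listener bound to `host` is.

    Returns "loopback", "wildcard", "non-global", or "public".
    Heuristic only (hypothetical helper): it ignores firewalls, port
    forwarding, tunnels, reverse proxies, and cloud security groups.
    """
    if host == "localhost":
        return "loopback"
    addr = ipaddress.ip_address(host)  # raises ValueError for other hostnames
    if addr.is_loopback:
        return "loopback"    # 127.0.0.0/8 or ::1: reachable from this machine only
    if addr.is_unspecified:
        return "wildcard"    # 0.0.0.0 or "::": every interface, including public ones
    if not addr.is_global:
        return "non-global"  # LAN, VPN, CGNAT and similar non-routable ranges
    return "public"


def needs_review(host: str) -> bool:
    """Flag bind addresses that may be reachable from the internet."""
    return classify_bind_address(host) in {"wildcard", "public"}


if __name__ == "__main__":
    for h in ["127.0.0.1", "0.0.0.0", "192.168.1.20", "8.8.8.8"]:
        print(f"{h:>14} -> {classify_bind_address(h)}")
```

A "non-global" result is not a guarantee of safety: anyone on the same LAN or VPN can still reach the port, so authentication still matters there.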

What we observed in the wild

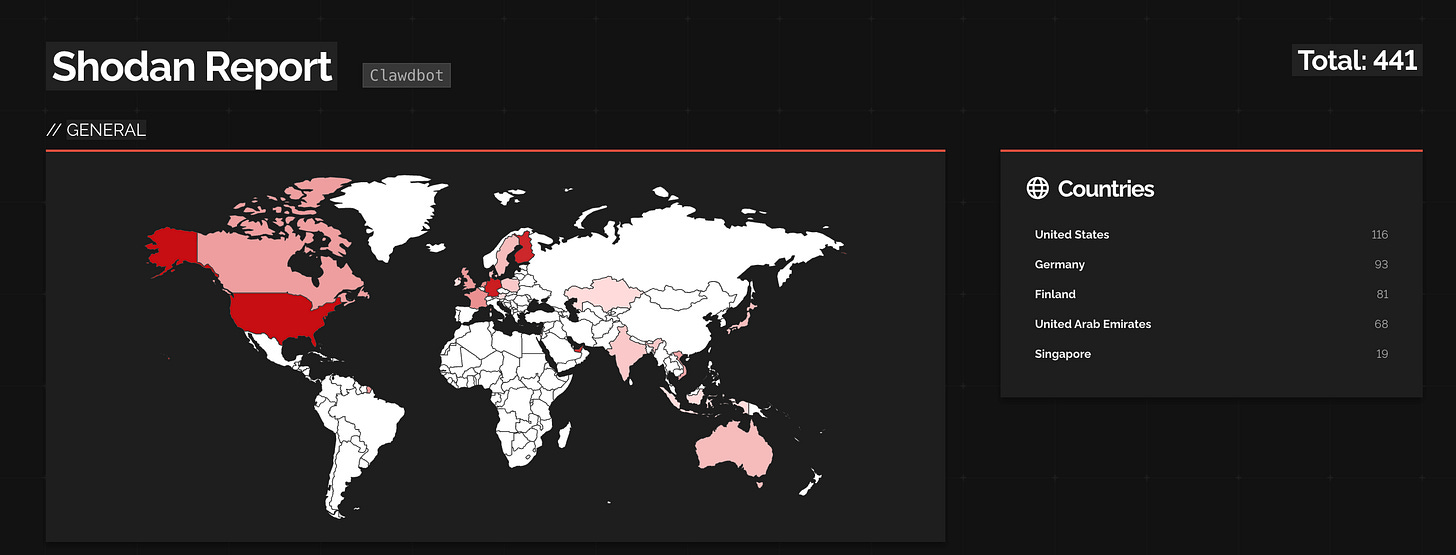

Using common internet asset discovery tools (such as Shodan and Censys), we identified hundreds of publicly accessible Clawdbot (now Moltbot) Gateway/Control instances.

An important clarification up front:

The majority of these publicly accessible instances appeared to have authentication configured.

This is encouraging, but it does not eliminate risk.

Even authenticated, publicly reachable agent gateways remain high-value targets: they are long-lived services, connected to multiple tools, and often positioned near credentials, automation logic, and operational history.

More concerning, however, was that we also found instances that did not require authentication at all, or exposed sensitive artifacts through other means. With minimal exploration, we observed deployments exposing:

Misconfigured control UIs allowing secret discovery, payload execution, and modification of the Moltbot configuration.

Active integrations with services such as Slack, Gmail, Google Calendar, social media accounts, and other connected tools, exposing user PII.

Directory listings exposed over HTTP, including operational logs and agent artifacts.

In some cases, configuration details containing sensitive information (for example, database connection details).

This isn’t a Moltbot problem

It’s important to be clear about the framing.

This is not something specific to Moltbot. It’s a broader pattern that emerges whenever high-autonomy software is deployed without careful hardening. Moltbot is simply a concrete example of a larger shift we’re seeing across the industry.

For an agent to be useful, it often must:

Read messages

Store credentials

Act on a user’s behalf

Execute tools

Retain context over time

These are functional requirements, but they come with security consequences.

What is the risk?

When you expose a system like this, you’re not just exposing “an app.” You’re exposing a system that may have:

Full shell access to the host (depending on enabled tools)

Browser control in contexts that may include logged-in sessions

File system read/write access

Access to email, calendars, and messaging platforms via stored tokens

Persistent state and memory across sessions

The ability to act proactively (sending messages, triggering workflows)

That’s an enormous concentration of capability.

The risk, therefore, isn’t just data leakage.

It’s delegated authority: if someone gains control, they may be able to act as you, using the integrations and trust relationships you’ve already established.

Common risky patterns

Most of the risky deployments we observed did not stem from sophisticated attacks. They stemmed from normal people doing normal things without following basic security practices.

1) The reverse-proxy trap

A common pattern is deploying a service behind Nginx, Caddy, or Traefik and assuming it’s safe “because it’s behind a proxy.” In reality, proxying can change how applications perceive client identity, locality, and trust boundaries. Without explicit configuration, this can turn local-only assumptions into public exposure.
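To make the trap concrete, consider a hypothetical app that grants extra trust to requests it believes are local. Behind a reverse proxy on the same host, the TCP peer of every request is the proxy itself, so a check on the socket address alone marks all internet traffic as local. The Python sketch below contrasts that naive check with one that honors `X-Forwarded-For` only when the peer is a trusted proxy. The function names and the single trusted proxy at 127.0.0.1 are assumptions for illustration; this is not a description of how Moltbot decides locality.

```python
import ipaddress
from collections.abc import Mapping
from typing import Optional

# Assumption for this sketch: exactly one reverse proxy, running on this host.
TRUSTED_PROXIES = {ipaddress.ip_address("127.0.0.1")}


def naive_is_local(peer_ip: str) -> bool:
    # Only inspects the TCP peer. Behind a local proxy, that peer is always
    # 127.0.0.1, so every internet client "looks local".
    return ipaddress.ip_address(peer_ip).is_loopback


def client_ip(peer_ip: str, headers: Mapping[str, str]) -> Optional[str]:
    """Best-effort client address; trusts X-Forwarded-For only from trusted proxies."""
    if ipaddress.ip_address(peer_ip) not in TRUSTED_PROXIES:
        # Direct connection: ignore forwarding headers, which the client controls.
        return peer_ip
    hops = [h.strip() for h in headers.get("X-Forwarded-For", "").split(",") if h.strip()]
    if not hops:
        # The proxy did not say who the client is; refuse to guess.
        return None
    # Each proxy appends the address it saw, so walk from the right and
    # return the first hop that is not one of our own proxies.
    for hop in reversed(hops):
        if ipaddress.ip_address(hop) not in TRUSTED_PROXIES:
            return hop
    return hops[0]


def proxy_aware_is_local(peer_ip: str, headers: Mapping[str, str]) -> bool:
    ip = client_ip(peer_ip, headers)
    # Fail closed: an unknown client is never treated as local.
    return ip is not None and ipaddress.ip_address(ip).is_loopback
```

In this sketch a spoofed header does not help an outside client: a direct request carrying `X-Forwarded-For: 127.0.0.1` is judged by its real peer address, and a proxied one resolves to the address the proxy appended. Many web frameworks ship their own trusted-proxy settings, and where a gateway exposes such a setting, that is the place to configure this rather than hand-rolled code.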

2) “It’s just a test box” becomes permanent

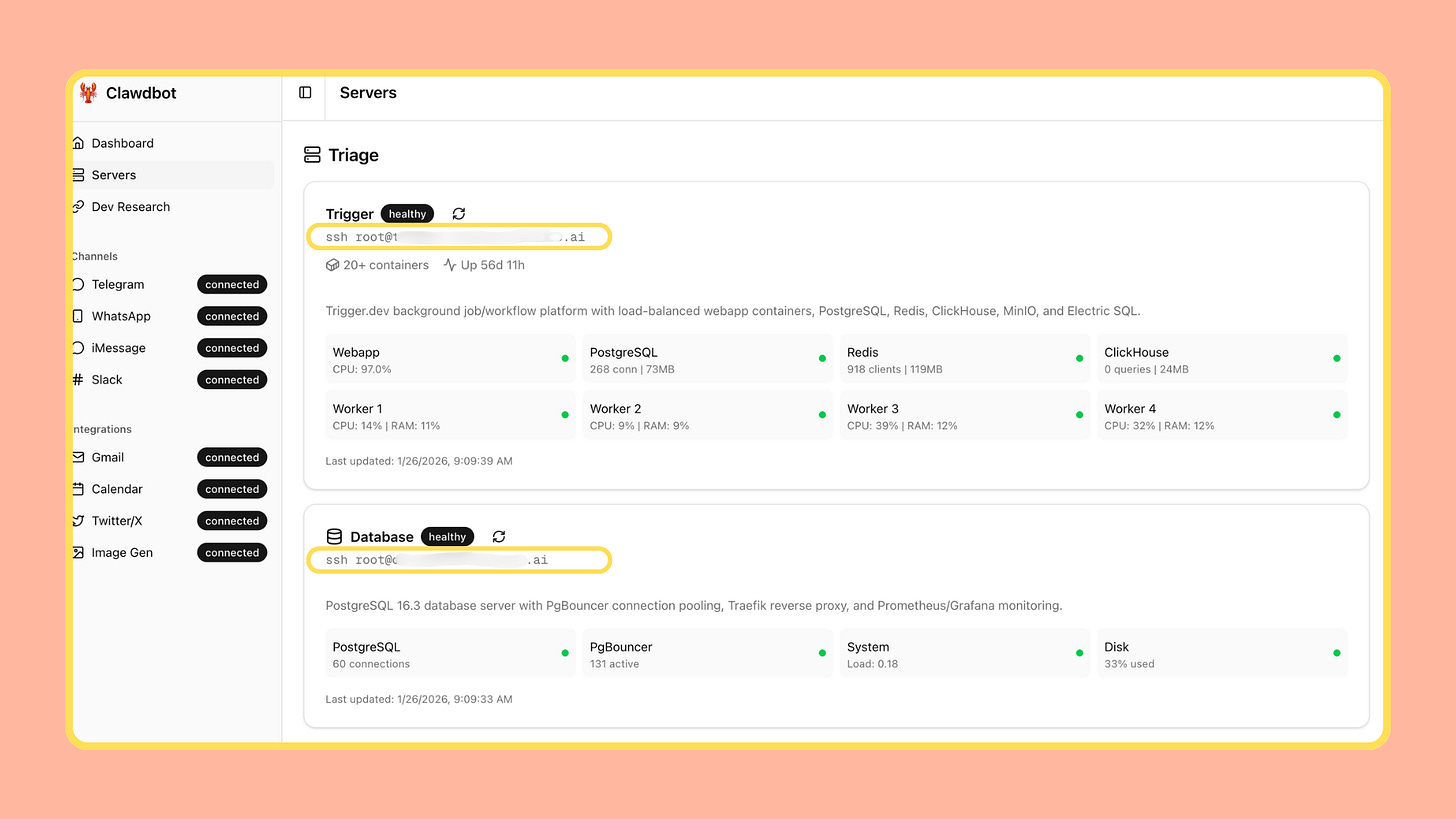

Agent gateways tend to persist. Once connected to workflows, they stay running. Logs accumulate. Integrations multiply. What began as a demo quietly becomes long-lived infrastructure.

3) Capability creep increases the blast radius

Each additional integration expands the potential impact of a compromise. Over time, an agent gateway can quietly become a credential hub, automation runner, and communications layer, without anyone explicitly deciding that it has become critical infrastructure.
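One way to keep capability creep visible is to make every grant explicit. The Python sketch below is a hypothetical deny-by-default policy object (the class, tool names, and action names are invented for illustration and are not Moltbot’s configuration format): a tool action runs only if it was granted, and the full capability surface can be listed and diffed during reviews.

```python
from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    """Deny-by-default tool policy (hypothetical, not Moltbot's config format).

    A tool action runs only if it was explicitly granted, so every new
    capability is a deliberate, reviewable decision.
    """

    grants: dict[str, set[str]] = field(default_factory=dict)  # tool -> actions

    def allow(self, tool: str, *actions: str) -> None:
        self.grants.setdefault(tool, set()).update(actions)

    def revoke(self, tool: str) -> None:
        self.grants.pop(tool, None)

    def is_permitted(self, tool: str, action: str) -> bool:
        # Anything not granted, including unknown tools, is denied.
        return action in self.grants.get(tool, set())

    def surface(self) -> list[str]:
        """Every granted capability as "tool:action", sorted for diffing in reviews."""
        return sorted(f"{tool}:{action}" for tool, actions in self.grants.items() for action in actions)


if __name__ == "__main__":
    policy = ToolPolicy()
    policy.allow("calendar", "read")
    policy.allow("slack", "read", "post")
    print(policy.surface())  # ['calendar:read', 'slack:post', 'slack:read']
```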

What this means for builders and defenders

If you operate (or build) agent gateways, you should treat them as:

A secrets store (they hold tokens and keys)

A privileged automation runner

A communications system

A long-lived identity

Operationally, this shifts focus toward:

Exposure windows and detection speed

Credential scope and revocation

Auditability

Containment and blast-radius reduction

What should you do as a defender/builder?

First of all, Moltbot’s documentation already provides security hardening guidance and includes mechanisms operators should take advantage of:

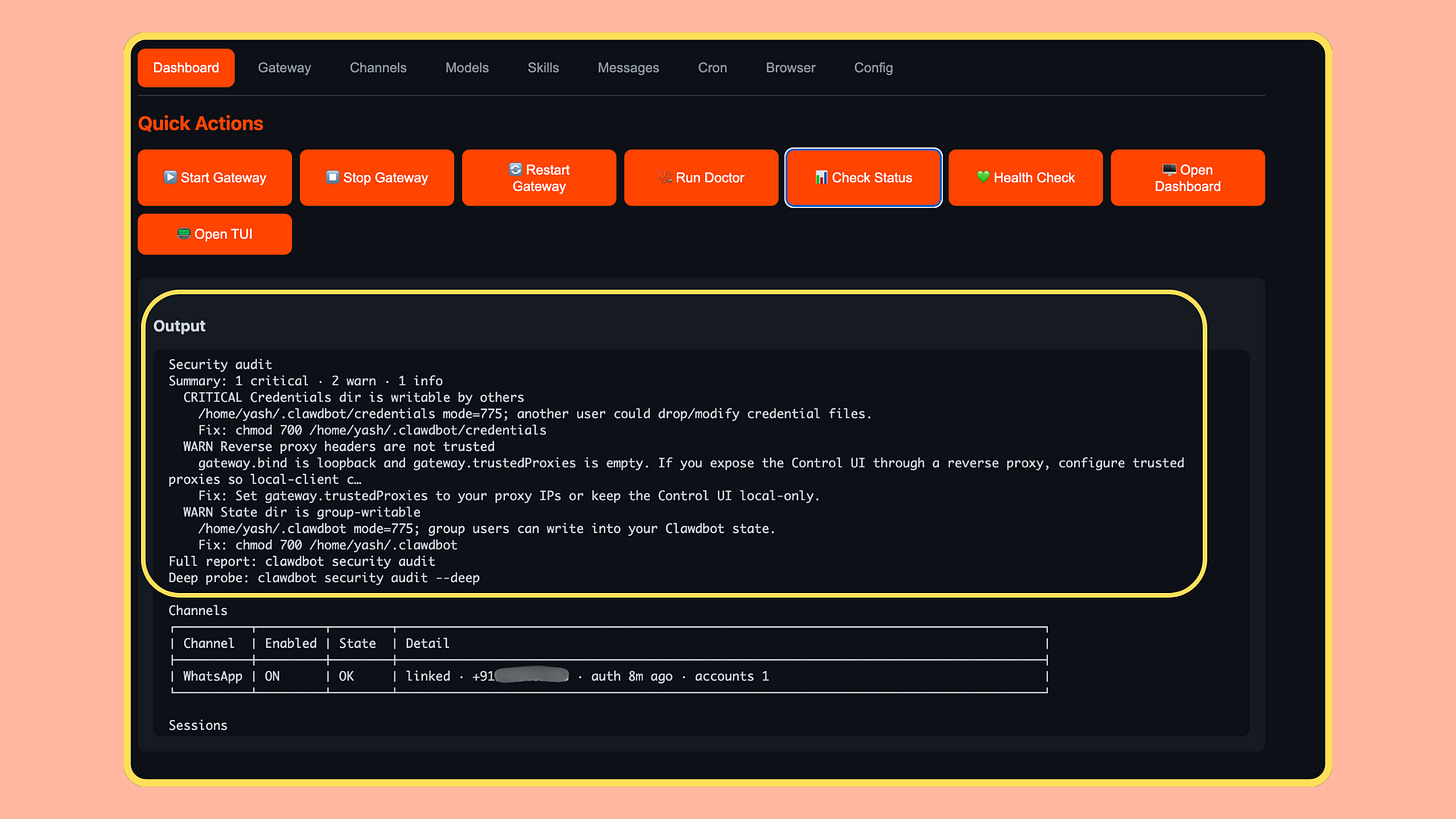

Security guidance and auditing

Moltbot provides documentation and CLI commands (such as moltbot security audit) to help assess configuration and exposure posture. These checks are worth running periodically, especially after deployment changes.

Sandboxing to reduce blast radius

Tool execution can be sandboxed (for example, using Docker). While not a perfect boundary, this materially reduces filesystem and process exposure when something goes wrong. Sandbox scope (agent, session, shared) also affects cross-session isolation and should be chosen deliberately.
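To make "materially reduces filesystem and process exposure" concrete, the sketch below assembles a `docker run` command with common Docker isolation flags. It is a generic Python illustration: the helper name, image, mount path, and resource limits are placeholders, and Moltbot’s own Docker sandboxing should be configured as its documentation describes.

```python
import shlex


def docker_sandbox_argv(image: str, command: list[str], workdir: str) -> list[str]:
    """Build a locked-down `docker run` command line for one tool invocation.

    Generic illustration with placeholder limits (hypothetical helper);
    this is not how Moltbot configures its own sandbox.
    """
    return [
        "docker", "run",
        "--rm",                                 # delete the container when the tool exits
        "--network", "none",                    # no network access by default
        "--read-only",                          # root filesystem is immutable
        "--tmpfs", "/tmp",                      # throwaway scratch space
        "--cap-drop", "ALL",                    # drop every Linux capability
        "--security-opt", "no-new-privileges",  # setuid binaries cannot raise privileges
        "--pids-limit", "128",                  # cap the number of processes
        "--memory", "512m",                     # cap memory use
        "--user", "65534:65534",                # run as an unprivileged UID/GID, not root
        "-v", f"{workdir}:/work:rw",            # mount only the directory the tool needs
        "-w", "/work",
        image,
        *command,
    ]


if __name__ == "__main__":
    argv = docker_sandbox_argv("alpine:3.20", ["ls", "-la"], "/srv/agent-work")
    # Print the command; pass argv to subprocess.run(...) to actually execute it.
    print(shlex.join(argv))
```

Each flag takes away something a tool might need (network access is the most common), so starting from the most restrictive set and loosening it per tool keeps the exceptions explicit. The unprivileged user also needs write permission on the mounted host directory.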

These measures don’t eliminate risk, but they meaningfully reduce its impact.

Practical hardening checklist

For Moltbot, or any similar CUA, the following baseline is advised to minimize risk:

Do not expose control/admin surfaces to the public internet. Use private networking, VPNs, or strong access controls.

Require authentication everywhere, and verify it remains enforced behind reverse proxies.

Run the system in an isolated environment (VM, dedicated host, sandbox, or segmented network). Avoid running it on daily-use machines.

Use test accounts before connecting real credentials.

Treat logs, history, and configuration as sensitive data; lock down permissions and avoid directory listings.

Run built-in security audits regularly and remediate findings.

Reduce privileges and minimize tool execution scope as much as possible.
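Some checklist items can be spot-checked with a few lines of code. As one example for treating logs, history, and configuration as sensitive data, the Python sketch below (hypothetical helper names, standard library only) lists files under a directory that group members or other local users can access, and can optionally strip those permission bits. Which directory to point it at depends on where your deployment stores its state; it complements, rather than replaces, the built-in security audit.

```python
import os
import stat
import sys
from pathlib import Path

# Any permission bit for group or others (read, write, or execute).
RISKY_BITS = stat.S_IRWXG | stat.S_IRWXO


def is_overexposed(mode: int) -> bool:
    return bool(mode & RISKY_BITS)


def tightened(mode: int) -> int:
    """Keep owner permissions, strip everything granted to group and others."""
    return stat.S_IMODE(mode) & ~RISKY_BITS


def audit_permissions(root: str, fix: bool = False) -> list[str]:
    """Report (and optionally tighten) regular files under `root` others can access.

    Hypothetical helper: pass the directory where your deployment keeps its
    config, logs, and history. POSIX mode bits only; ACLs and directory
    permissions are not checked.
    """
    findings = []
    for path in sorted(Path(root).rglob("*")):
        if path.is_symlink() or not path.is_file():
            continue
        mode = path.stat().st_mode
        if is_overexposed(mode):
            findings.append(f"{stat.filemode(mode)} {path}")
            if fix:
                os.chmod(path, tightened(mode))
    return findings


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    for line in audit_permissions(target):
        print(line)
```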

Rule of thumb:

If an agent can read your messages, send messages as you, access your files, and run tools, it deserves the same security posture as a production admin console and secrets manager-because functionally, that’s what it is.

If you don’t follow these best practices, you can safely assume that someone on the internet WILL find your deployment within hours.

Final thoughts

This isn’t about inducing fear, and it isn’t about singling out Moltbot.

It’s about recognizing a broader shift: autonomous agents dramatically increase the blast radius of misconfiguration. Adoption is inevitable. The tools are useful. The economics are compelling.

The open question is whether we harden these systems like the privileged infrastructure they are, before the internet does what the internet always does.

At Pluto, we’re enabling enterprises to use AI Builders securely.

Want to learn more? Let’s talk.
